Notion Fitnotes Integration

Nov 3, 2024 | Project

Technology

TL;DR

- Created a JavaScript integration that syncs exercise details between Notion and the FitNotes Android app using the app's SQLite backup file.

- Automated the process by triggering a cloud function when the backup file is uploaded to Google Drive. The function accesses Drive with a service account instead of the typical OAuth authorization flow.

- Used the Prisma ORM with a database connection string set at runtime, reading the downloaded SQLite file from the cloud function's in-memory file system.

Why I Built This

If you’ve ever wandered into a gym before, you may have felt overwhelmed by the sheer number of contraptions you can use to torture your body. I know I certainly did!

To make things more manageable, I downloaded an Android app called FitNotes so that I could at least track what I was doing. Even though the UI felt a bit dated, I liked the app because it is basic, free, private, and customizable.

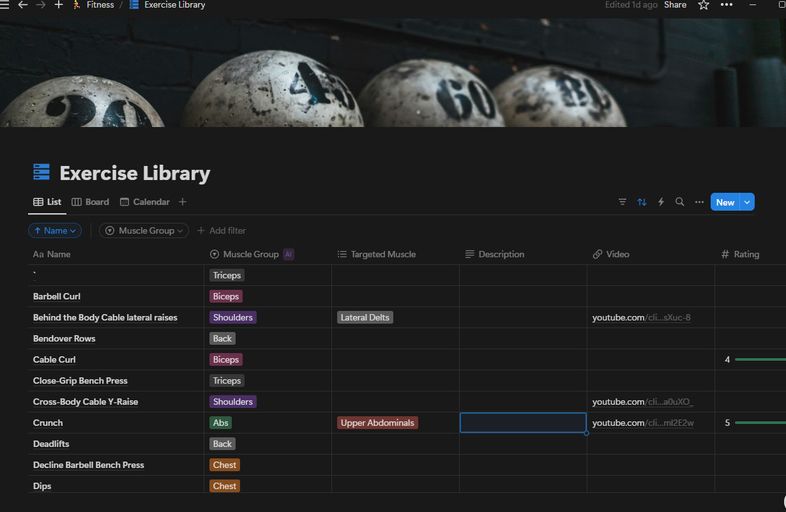

However, there were some features I wished it had, like a description or video showing how to do each exercise. So I started poking around to see if I could find an automated way to add this information to the exercises.

The breakthrough came when I realized that the app’s backup file is just an SQLite database. This meant I could modify the file and restore it back into the app with my changes. That sparked an idea—what if I created a library of exercises in Notion and synced it with the FitNotes backup file?

Features

- A JavaScript Notion integration that synchronizes exercises between a Notion database and the FitNotes Android app.

- The integration uses a Google Cloud function that is triggered when a FitNotes backup file is uploaded to Google Drive.

- During synchronization, fields such as the description and video URL are copied from the Notion database into the FitNotes database.
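The compare-and-match step can be sketched as a pure function that decides which FitNotes rows need new text before anything is written. This is a minimal illustration under stated assumptions, not the project's actual code: the Notion pages are assumed to be flattened already into objects with name, description, and videoUrl; the FitNotes rows are assumed to expose id, name, and a notes column (hypothetical shapes, so check the Prisma schema generated from a real backup); and exercises are matched by a normalized name.

```javascript
// Hypothetical record shapes (not the real FitNotes schema):
//   Notion:   { name, description, videoUrl }
//   FitNotes: { id, name, notes }

// Normalize names so "Bench  Press " and "bench press" match.
function normalizeName(name) {
  return String(name ?? "").trim().toLowerCase().replace(/\s+/g, " ");
}

// Work out which FitNotes rows need new notes, without touching the database.
function planExerciseUpdates(notionExercises, fitnotesExercises) {
  // Index Notion exercises by normalized name (a later duplicate wins).
  const notionByName = new Map();
  for (const exercise of notionExercises) {
    notionByName.set(normalizeName(exercise.name), exercise);
  }

  const updates = [];
  const unmatched = [];
  for (const row of fitnotesExercises) {
    const match = notionByName.get(normalizeName(row.name));
    if (!match) {
      unmatched.push(row.name);
      continue;
    }
    // Combine description and video link into one text field; skip empty parts.
    const notes = [match.description, match.videoUrl].filter(Boolean).join("\n\n");
    // Only emit an update when there is something new to write.
    if (notes && notes !== row.notes) {
      updates.push({ id: row.id, notes });
    }
  }
  return { updates, unmatched };
}
```

Each entry in updates could then be written back through the Prisma client (for example, an update call on whatever model Prisma generated for the exercise table), while unmatched lists FitNotes exercises that do not have a Notion page yet. Keeping the planning step free of I/O also makes it easy to check locally.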

How it works

The integration leverages the FitNotes backup file—an SQLite database that can be exported from the app to Google Drive. I set up a specific Google Drive folder with a watch notification to trigger a cloud function when new files are uploaded.
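A watch notification in Drive is a push-notification channel. The sketch below registers one on the backup folder with files.watch, assuming a googleapis Drive v3 client (for example, the one returned by getDriveClient()) is passed in. The helper name watchBackupFolder and its option names are hypothetical; channelId is any unique string you generate, webhookUrl is the deployed function's HTTPS URL, and token is a shared secret that Drive echoes back with every notification.

```javascript
// Sketch: register a Drive push-notification channel on the backup folder.
// Hypothetical helper; `drive` is a googleapis Drive v3 client.
async function watchBackupFolder(drive, { folderId, channelId, webhookUrl, token, ttlMs }) {
  const requestBody = {
    id: channelId,       // unique ID for this channel
    type: "web_hook",    // deliver notifications as HTTPS POST requests
    address: webhookUrl, // the cloud function's trigger URL
    token,               // echoed back in the X-Goog-Channel-Token header
  };
  if (ttlMs) {
    // Expiration is a Unix timestamp in milliseconds, sent as a string.
    requestBody.expiration = String(Date.now() + ttlMs);
  }
  // files.watch on the folder's ID subscribes to changes on that folder.
  const res = await drive.files.watch({ fileId: folderId, requestBody });
  // res.data includes resourceId and expiration, needed to stop or renew the channel.
  return res.data;
}
```

Channels expire, so they need to be renewed periodically; keeping the returned resourceId makes it possible to stop an old channel with drive.channels.stop. Watching the account's change feed with changes.watch is an alternative if a folder-level channel turns out to be too coarse.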

When triggered, the function:

- Verifies the request's authenticity via a token

- Downloads the most recently uploaded file

- Loads exercises through the Notion API and from the FitNotes SQLite database

- Compares, matches, and updates exercise information

- Uploads the modified SQLite file back to a processed folder in Google Drive
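The token check and the rest of the flow above can be sketched as an HTTP entry point, assuming the Express-style (req, res) signature that HTTP-triggered cloud functions use in Node.js. Drive sends the channel token in the X-Goog-Channel-Token header and the event type in X-Goog-Resource-State, and the first message after a channel is created has the state "sync". The factory name and the injected syncLatestBackup callback are hypothetical stand-ins for the download, sync, and upload steps.

```javascript
// Sketch of the HTTP entry point for Drive push notifications.
// Hypothetical names: createDriveWebhookHandler, syncLatestBackup.
function createDriveWebhookHandler({ expectedToken, syncLatestBackup }) {
  return async function handleDriveNotification(req, res) {
    // Node lowercases incoming header names.
    const headers = req.headers || {};

    // 1. Authenticity: Drive echoes the channel token set at registration.
    const token = headers["x-goog-channel-token"];
    if (!expectedToken || token !== expectedToken) {
      res.status(401).send("Invalid channel token");
      return;
    }

    // 2. The first message on a new channel has state "sync"; it is not an upload.
    if (headers["x-goog-resource-state"] === "sync") {
      res.status(200).send("Channel sync acknowledged");
      return;
    }

    // 3. Download, sync with Notion, and upload (delegated to the callback).
    try {
      await syncLatestBackup();
      res.status(200).send("Backup processed");
    } catch (err) {
      console.error("Sync failed:", err);
      res.status(500).send("Sync failed");
    }
  };
}
```

Injecting syncLatestBackup keeps the handler easy to exercise locally with fake request and response objects. For a production token check, a constant-time comparison such as crypto.timingSafeEqual is a reasonable upgrade over plain string equality.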

Challenges

By far the most time-consuming part of this little project was figuring out Google Cloud, especially since it was my first time using it. I chose Google Cloud because I wanted a seamless integration with Google Drive.

While not inherently difficult, it did take some time navigating the documentation, deciphering the different versions of cloud functions, and configuring local testing.

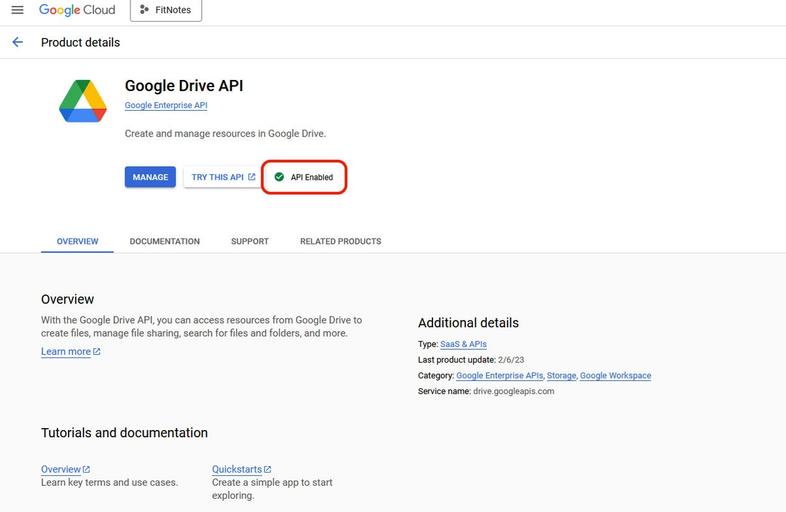

Accessing the Google Drive API without having an OAuth consent screen

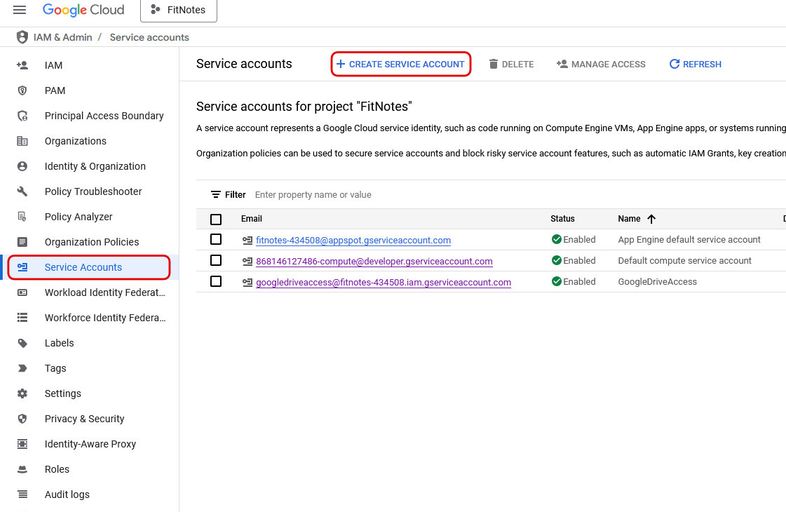

The Google Drive quickstart documentation quickly pointed me in the wrong direction. It suggests using OAuth credentials to access Google Drive. In other words, I'd need to set up OAuth client credentials, and then, during authorization, a consent screen pops up asking the user whether the app may access their Google Drive. However, since this integration is a server-to-server interaction, I didn't want the OAuth consent screen. It took a little digging, but I was able to bypass it with the following approach:

Go to the API library and make sure the Google Drive API is enabled for the project.

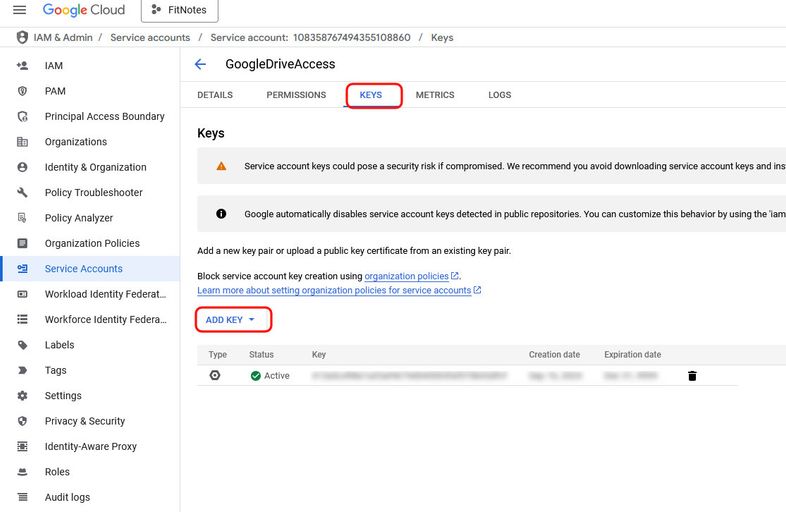

Create a service account in Google Cloud.

Open the new service account and create a new key. The JSON key file downloads automatically when the key is created.

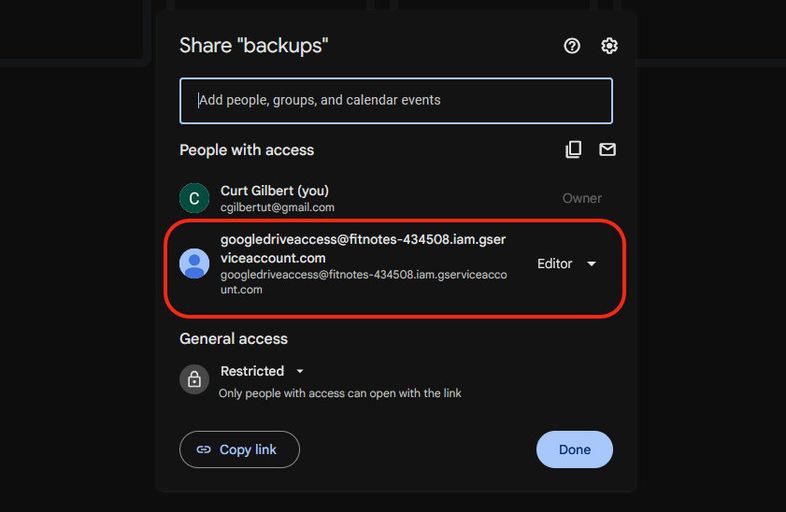

Finally, share the Drive folder with the service account's email address.

Then, when creating the Drive client, point it at the service account key file downloaded earlier.

DbClient.js

```javascript
import { google } from "googleapis";
import path from "path";

// Cached Drive client, reused while the function instance stays warm
let drive = undefined;

// Authenticate as the service account using its downloaded JSON key file
async function authorize() {
  const auth = new google.auth.GoogleAuth({
    keyFile: path.join(process.cwd(), "./credentials/service_account.json"),
    scopes: ["https://www.googleapis.com/auth/drive"],
  });
  return auth.getClient();
}

// Return a Drive v3 client, creating it on first use
export async function getDriveClient() {
  if (drive) {
    return drive;
  }
  const auth = await authorize();
  drive = google.drive({ version: "v3", auth });
  return drive;
}
```

ORM access to a dynamically loaded SQLite database

For this project, I used the Prisma ORM primarily because I was already familiar with it, and the project requirements were straightforward enough that I anticipated it would be my quickest implementation option. Generating a Prisma schema from the existing SQLite database file was also simple.

I initially anticipated a challenge with the dynamic database connection string, which is typically set in the schema.prisma file. I was unsure whether there was a way to pass dynamic data to the schema, and even if there were, regenerating the Prisma client in a production environment would be impractical.

Fortunately, I discovered that when creating the Prisma client in code, you can pass options to override the database connection string directly in the constructor.

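DbClient.js

```javascript
import { PrismaClient } from "@prisma/client";

// Cached Prisma client for the lifetime of the function instance
let db = undefined;

// Create a Prisma client that points at an SQLite file chosen at runtime.
// The `db` key under `datasources` must match the datasource name in schema.prisma.
// Once created, the client is reused, so later calls ignore `path`.
export function generateDbConnection(path) {
  if (!db) {
    db = new PrismaClient({
      datasources: {
        db: {
          url: `file:${path}`,
        },
      },
    });
  }
  return db;
}
```

Saving files in the cloud function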

I was initially concerned about how to handle the SQLite file within the cloud function, specifically how to store, access, modify, and then re-upload it to Google Drive. Since the file was quite small, I wanted to avoid setting up a complex storage solution. Fortunately, I discovered that Google Cloud Functions provide an in-memory file system that mimics a Linux environment, with a writable /tmp directory. Files written there count against the function's memory allocation, which is no problem for a small backup. This meant I could simply download the file to /tmp, modify it in place, and upload the result.
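Downloading to /tmp and uploading the result can be sketched with the Drive v3 files.list, files.get, and files.create methods. Assumptions: drive is a googleapis Drive v3 client such as the one returned by getDriveClient(); fs is Node's built-in fs module, passed in rather than imported so the sketch can be exercised with fakes; the function names and the separate processed-folder ID are illustrative; and "most recently uploaded" is approximated by the newest createdTime.

```javascript
// Sketch: fetch the newest backup into /tmp, then upload a processed copy.
// Hypothetical helpers; `drive` is a Drive v3 client, `fs` is Node's "fs" module.

async function downloadLatestBackup(drive, fs, folderId, tmpDir = "/tmp") {
  // Ask Drive for the single most recently created, non-trashed file in the folder.
  const list = await drive.files.list({
    q: `'${folderId}' in parents and trashed = false`,
    orderBy: "createdTime desc",
    pageSize: 1,
    fields: "files(id, name, createdTime)",
  });
  const latest = (list.data.files || [])[0];
  if (!latest) {
    return null; // nothing uploaded yet
  }

  // alt: "media" returns the file's bytes rather than its metadata.
  const res = await drive.files.get(
    { fileId: latest.id, alt: "media" },
    { responseType: "arraybuffer" }
  );

  // Write into the in-memory /tmp directory; strip path separators from the name.
  const safeName = latest.name.replace(/[\\/]/g, "_");
  const localPath = `${tmpDir}/${safeName}`;
  await fs.promises.writeFile(localPath, Buffer.from(res.data));
  return { id: latest.id, name: latest.name, localPath };
}

async function uploadProcessedBackup(drive, fs, localPath, name, processedFolderId) {
  // Create a new file in the processed folder, streaming the modified SQLite file.
  const res = await drive.files.create({
    requestBody: { name, parents: [processedFolderId] },
    media: {
      mimeType: "application/octet-stream",
      body: fs.createReadStream(localPath),
    },
    fields: "id",
  });
  return res.data.id;
}
```

The localPath returned by the download is the value that would be handed to generateDbConnection(), so Prisma opens the downloaded copy; after the sync, the same path is streamed back up.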

Thoughts and Lessons Learned

TypeScript vs. JavaScript.

I initially planned to use TypeScript for the project but quickly pivoted to JavaScript. The time-consuming process of setting up type definitions, tooling, and library type configurations outweighed the potential benefits. For a small, personal project where I was the sole developer and didn't anticipate significant future modifications, JavaScript proved to be the more practical choice.

Google Cloud

This was my first hands-on experience with Google Cloud. While it shared similarities with other cloud providers I'd used, one feature stood out: the project-based resource organization. Unlike other platforms, Google Cloud provides a clear project indicator at the top of each service page, making it easy to track exactly which project you're working on.

In contrast, AWS feels significantly more complex when it comes to resource management. Organizing resources by project or region can be challenging, and following best practices often means creating separate accounts for different projects and environments—a process that can quickly become unwieldy and intricate.

Taking it Further

Leveraging automatic backup.

The FitNotes app does have an automatic backup feature that is currently in beta. However, these backup files can supposedly only be seen or accessed by the FitNotes app (at least, that is what the app says). It would be interesting to find a way to access and watch those files, which would make the process even more automated.

Importing data into a fitness dashboard.

Another idea is to feed this data into a fitness dashboard that charts your progress. The dashboard could also pull in data from other fitness apps and devices (smartwatches, MyFitnessPal, and so on).

Conclusion

While this project didn't introduce groundbreaking technical learnings, it was an enjoyable exploration of automation and integration. Discovering how to modify an SQLite database and automate the process with a cloud function was a perfect way to pass the time while traveling through airports.